Table of Contents

- The Power of EXPLAIN in PostgreSQL

- Sequential Scan: The Fundamental Table Access Method

- Summary

- Next Steps

- References

The Power of EXPLAIN in PostgreSQL

When optimizing database performance, it’s essential to understand how your database executes queries. PostgreSQL provides the powerful EXPLAIN command that reveals the execution plan for your queries, helping you identify potential bottlenecks and optimization opportunities.

What is EXPLAIN?

The EXPLAIN command shows how PostgreSQL will execute your query. It reveals:

- How tables will be scanned (sequential scan, index scan, etc.)

- What join methods will be used for multiple tables

- Cost estimates and other performance metrics

Here’s how to use it:

EXPLAIN SELECT * FROM users;

QUERY PLAN------------------------------------------------------------- Seq Scan on users (cost=0.00..258.00 rows=5000 width=244) ^ ^ ^ ^ | | | | startup total output average cost cost rows row sizeUnderstanding the EXPLAIN Output

Let’s break down each component:

- Startup cost (0.00): Estimated computational units needed before the first row can be returned

- Total cost (258.00): Estimated computational units for the entire query execution

- Rows (5000): Estimated number of rows the operation will return

- Width (244): Estimated average size of each row in bytes

PostgreSQL’s Cost Model Explained

PostgreSQL uses a cost-based optimizer that evaluates different execution plans and selects the one with the lowest estimated cost.

The Unit of Cost

The baseline unit for cost is “the effort to fetch a single page sequentially.” This provides a reference point for all other operations:

-- Key cost parameters that influence planning decisionsseq_page_cost = 1.0 -- Cost of sequential page fetch (baseline)random_page_cost = 4.0 -- Cost of random page fetchcpu_tuple_cost = 0.01 -- Cost of processing a single rowcpu_index_tuple_cost = 0.005 -- Cost of processing an index entrycpu_operator_cost = 0.0025 -- Cost of processing an operator

-- You can adjust these parameters for your environmentSET random_page_cost = 2.0; -- Common setting for SSD storageSHOW random_page_cost; -- Verify the changeUnderstanding Page Costs: Sequential vs Random Access

Think of PostgreSQL’s cost model like a librarian deciding the fastest way to find books:

Sequential Page Cost (seq_page_cost = 1.0)

Imagine walking along a bookshelf, checking each book in order. This is a sequential scan - it’s predictable and consistent. PostgreSQL assigns this a baseline cost of 1.0 unit.

Random Page Cost (random_page_cost = 4.0)

Now imagine having to jump around the library, consulting the catalog and fetching books from different sections. This random access takes more effort! By default, PostgreSQL considers random access to be 4 times more expensive than sequential access.

Note: Cost values are unitless and relative, with 1.0 representing the baseline cost of a sequential page read.

Why This Matters

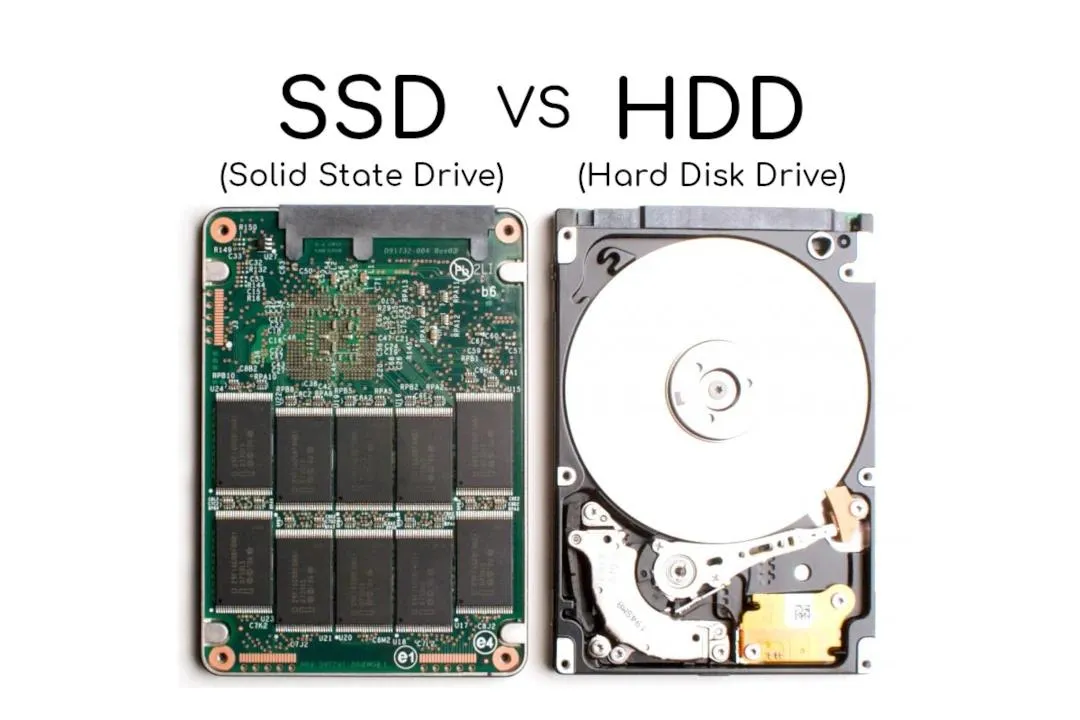

These cost settings determine when PostgreSQL chooses indexes:

- With HDDs (traditional hard drives): Random access is genuinely slower (heads must physically move)

- With SSDs: Random access is almost as fast as sequential access

SSD has no moving parts, so random access is almost as fast as sequential access.

For modern SSDs, many DBAs adjust the random_page_cost much lower (often to 1.1-2.0), which encourages PostgreSQL to use indexes more frequently, potentially improving performance.

How PostgreSQL Makes Planning Decisions

The query planner evaluates multiple potential execution plans by:

- Estimating the number of rows that would match the query conditions

- Calculating the cost of different access methods (sequential scans, index scans, etc.)

- Considering table statistics and data distribution

- Selecting the plan with the lowest total estimated cost

Sequential Scan: The Fundamental Table Access Method

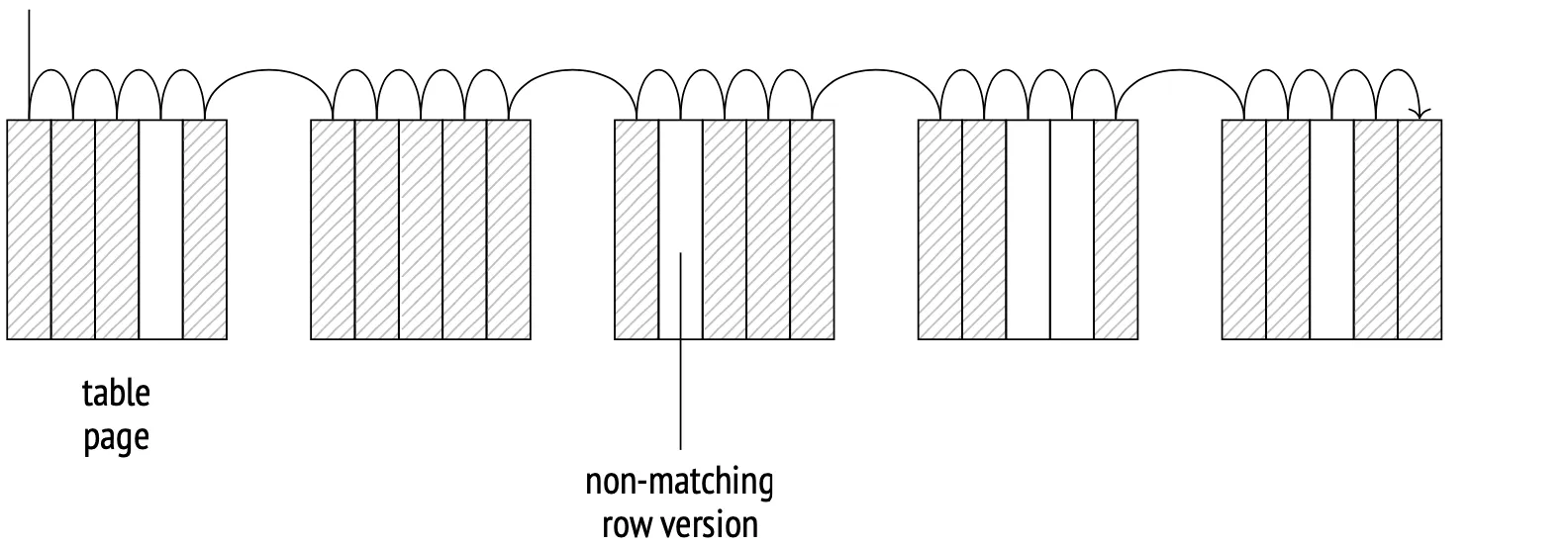

A sequential scan reads the entire table from start to finish, examining every row.

EXPLAIN SELECT * FROM users;

QUERY PLAN------------------------------------------------------------- Seq Scan on users (cost=0.00..258.00 rows=5000 width=244)When Sequential Scan is Used

PostgreSQL will choose a sequential scan when:

- Small Tables: When a table is small enough that reading the entire table is faster than using an index

- Large Result Sets: When a query is expected to return a significant portion of the table (typically >5-10%)

- No Suitable Indexes: When no indexes exist that could help with the query

- Full Table Operations: For operations that need to process every row (like

COUNT(*)without conditions)

Advantages of Sequential Scan

- Simplicity: No index lookup overhead

- Predictable I/O Pattern: Reads data in physical order, which is efficient for disk I/O

- Memory Efficiency: Can benefit from OS-level read-ahead caching

- No Index Maintenance: Doesn’t require indexes to be maintained

Disadvantages of Sequential Scan

- Inefficient for Large Tables: Reading millions of rows when you only need a few is wasteful

- Slower for Selective Queries: When you need only a small subset of data, indexes usually perform better

- Resource Intensive: Consumes more I/O, CPU, and memory when scanning large tables

Optimizing Sequential Scans

- Increase

effective_cache_size: Helps PostgreSQL estimate available system memory for caching - Adjust

work_mem: Provides more memory for sorting operations during scans - Consider table partitioning: Reduces the amount of data scanned for queries that can target specific partitions

- Use parallel sequential scans: Set

max_parallel_workers_per_gatherto utilize multiple CPU cores

Summary

The EXPLAIN command is your window into PostgreSQL’s decision-making process. By understanding how the query planner evaluates costs and chooses execution plans, you can make informed decisions about:

- When to create indexes

- How to structure your queries

- How to configure PostgreSQL for your hardware

- When sequential scans are appropriate and when they should be avoided

Next Steps

- Learn more about how data is stored on disk

- Explore index scans and when they’re preferred over sequential scans

- Discover other types of scans (bitmap scans, index-only scans)

- Master techniques to optimize your queries for better performance